How to upscale videos using AI with 20% bandwidth savings

AI video upscaling combines neural preprocessing with traditional encoders to achieve 20% bandwidth savings while improving quality. Modern solutions like SimaBit reduce bitrates by 22% while increasing VMAF scores by 4.2 points, processing 1080p frames in under 16 milliseconds for real-time applications.

At a Glance

• AI preprocessing acts as an intelligent filter before encoding, predicting perceptual redundancies to achieve 22% bandwidth reduction without quality loss

• Super-resolution technology enables encoding at 720p with AI reconstruction to 1080p or higher at playback, cutting file sizes dramatically

• Frame interpolation reduces bandwidth needs by predicting intermediate frames instead of encoding native high frame rates

• SimaBit processes 1080p frames in under 16 ms, enabling real-time preprocessing for both live streaming and VOD workflows

• Implementation delivers 25% operational cost reduction through lower CDN fees and reduced re-transcoding needs

• Solutions work seamlessly with existing H.264, HEVC, and AV1 pipelines without hardware upgrades

AI video upscaling is reshaping streaming economics. By preprocessing frames with neural models, teams can ship lower-bitrate files that reconstruct as razor-sharp HD or 4K on playback, often cutting bandwidth about 20% while enhancing viewer quality.

Why AI Video Upscaling and Bandwidth Savings Now Matter

The urgency for efficient video delivery has never been greater. Video traffic comprises 82% of all IP traffic, while the global media streaming market races toward $285.4 billion by 2034.

This explosive growth creates a critical challenge: how to deliver higher quality video without overwhelming network infrastructure or ballooning CDN costs. The answer lies in AI-powered preprocessing that fundamentally changes the bandwidth equation. Modern solutions achieve 22% or more bandwidth reduction while simultaneously improving visual quality: a combination previously thought impossible.

The technology works by acting as an intelligent pre-filter for encoders. Rather than simply compressing video, AI models predict perceptual redundancies and reconstruct fine detail after compression. This approach delivers immediate cost benefits beyond just bandwidth savings. Smaller files mean leaner CDN bills, fewer re-transcodes, and lower energy consumption across the entire delivery chain.

How Do Super-Resolution, Frame Interpolation & Neural Codecs Work?

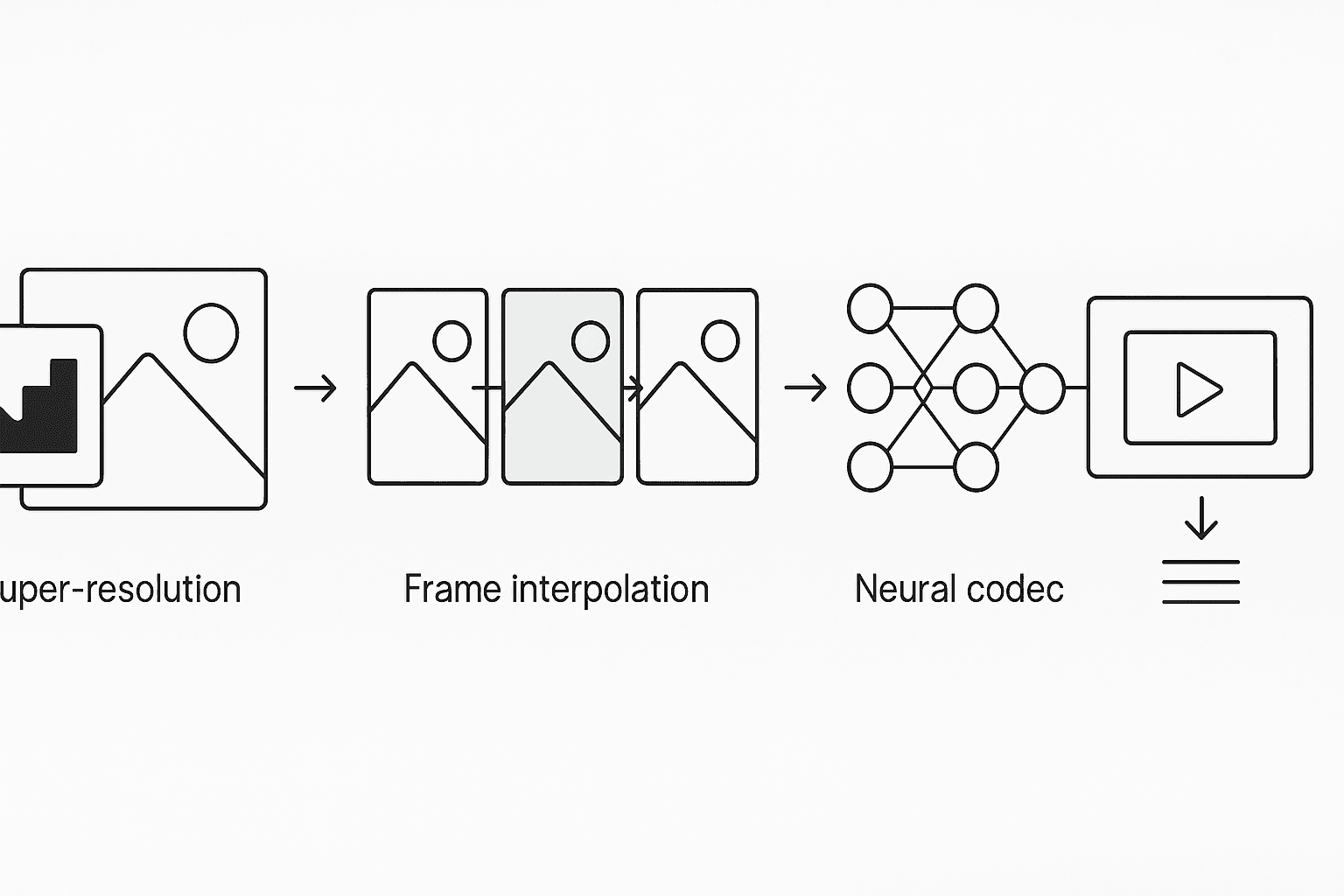

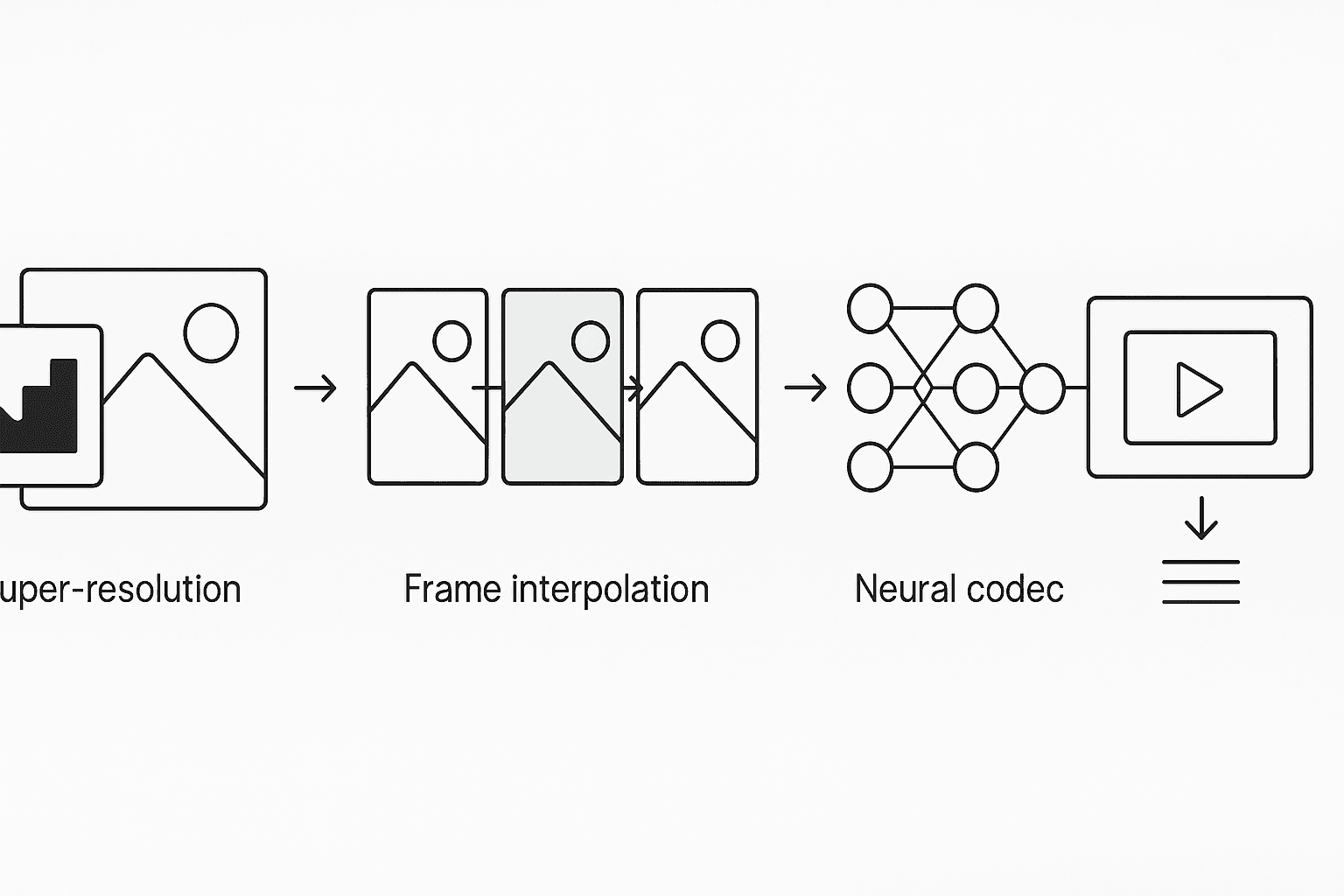

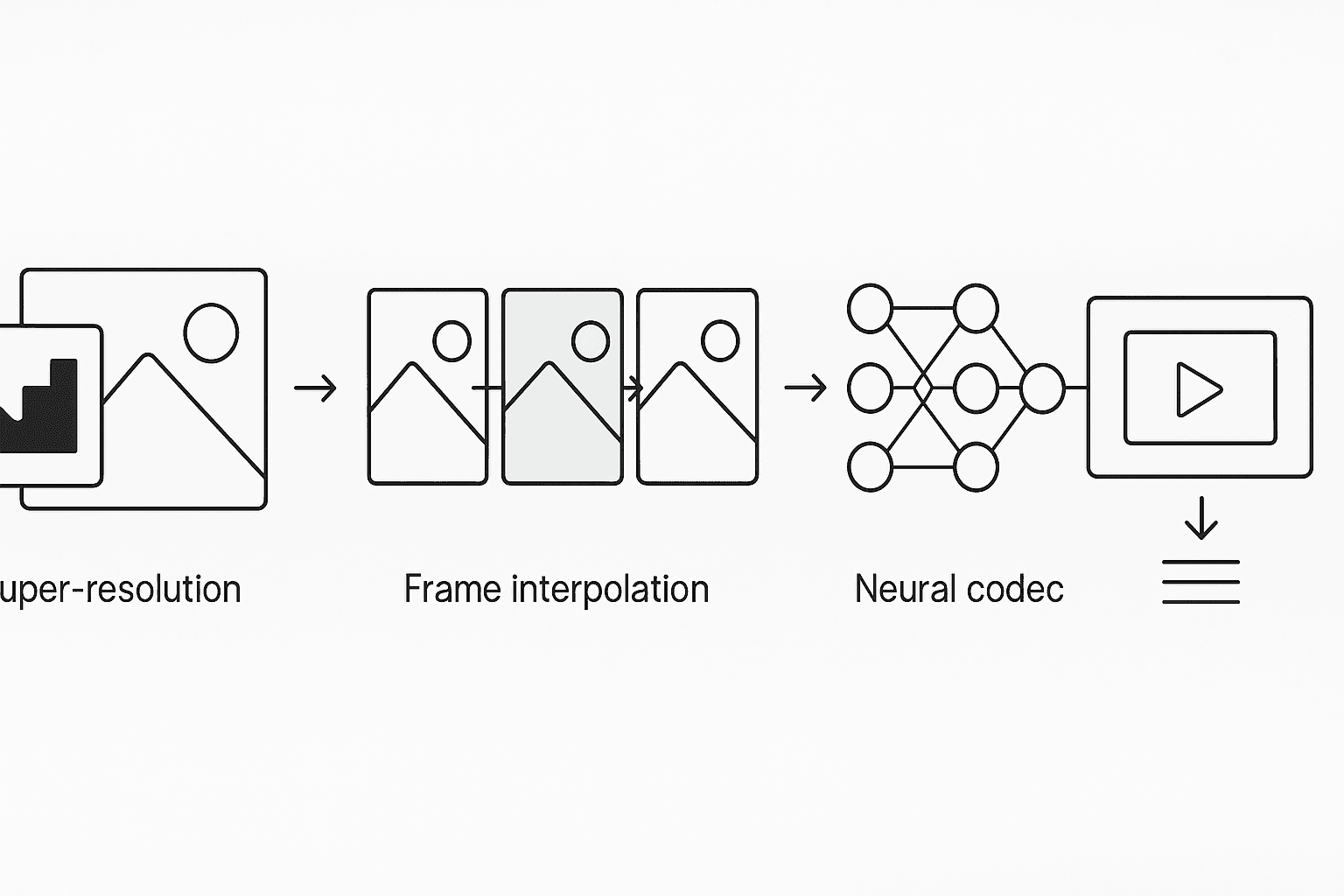

Three core AI techniques power modern video enhancement: super-resolution, frame interpolation, and neural codecs. Each addresses a different aspect of the quality-bandwidth challenge.

Super-resolution uses spatial-temporal cues to upsample from lower resolutions. Instead of encoding at native 4K, you can encode at 720p and let neural networks reconstruct the detail at playback. The AI analyzes motion patterns and texture information across multiple frames to estimate crisp detail beyond the original pixel count.
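To make the resolution trade-off concrete, here is a minimal sketch of the pixel arithmetic behind encoding low and reconstructing high. It only counts pixels; the resolution table and function names are illustrative assumptions, and real bitrate savings depend on content and encoder settings rather than on pixel count alone.

```python
# Pixel counts for common delivery resolutions (width, height).
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def upscale_factor(source: str, target: str) -> float:
    """Output pixels the model must produce per encoded input pixel."""
    return pixel_count(target) / pixel_count(source)


print(upscale_factor("720p", "1080p"))  # 2.25
print(upscale_factor("720p", "4K"))     # 9.0
```

A 720p encode carries one ninth of the pixels of a 4K frame, which is why the reconstruction model has to estimate most of the displayed detail.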

Frame interpolation predicts intermediate frames between existing ones using machine learning models trained on millions of video sequences. This technique sidesteps the bandwidth demands of native high frame rates: before compression, a 60fps video carries roughly double the data of 30fps, while 120fps can quadruple requirements.
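The frame-rate figures can be checked with a rough calculation of uncompressed data rates. This sketch assumes 8-bit 4:2:0 video (12 bits per pixel on average), which is an assumption for illustration; encoded bitrates usually grow less than linearly with frame rate because consecutive frames share so much content.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float,
                     bits_per_pixel: int = 12) -> float:
    """Uncompressed data rate in megabits per second.

    bits_per_pixel=12 corresponds to 8-bit 4:2:0 chroma subsampling,
    assumed here for illustration.
    """
    return width * height * bits_per_pixel * fps / 1_000_000


base = raw_bitrate_mbps(1920, 1080, 30)
for fps in (30, 60, 120):
    rate = raw_bitrate_mbps(1920, 1080, fps)
    print(f"{fps} fps: {rate:.1f} Mbps raw, {rate / base:.0f}x the 30 fps rate")
```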

Neural codecs represent the cutting edge, with models that balance inference speed against visual fidelity. These systems dynamically adapt to available hardware resources, processing frames in real time while maintaining broadcast-quality output. The trained models convert to ONNX format for broad compatibility while keeping the low latency that streaming applications require.

Picking the Right Toolchain: SimaUpscale, SimaBit & Topaz Video AI

Selecting the optimal AI engine depends on your specific workflow requirements, budget constraints, and quality targets.

SimaUpscale boosts resolution by 2× to 4× in real time while preserving quality, making it ideal for applications that require ultra-high fidelity. The technology integrates with existing pipelines and delivers crystal-clear streaming for high-impact scenarios like live sports and concerts.

SimaBit takes a different approach as a codec-agnostic preprocessing engine. The technology achieves 22% average reduction in bitrate while delivering a 4.2-point VMAF quality increase: a rare combination of bandwidth savings and quality improvement. It processes 1080p frames in under 16 milliseconds, making it suitable for both live streaming and VOD workflows.

Topaz Video AI denoises low-light footage and upscales old archival videos to 4K, starting at $25 per month. The software uses AI to achieve sharper results than standard tools allow, with one user noting: "Amazing software for enhancing and upscaling video and footage in bad condition. A real gem even with zero technical knowledge."

What Is the Step-by-Step Workflow to Deliver 20% Leaner Streams?

Implementing AI upscaling requires careful integration of preprocessing, encoding, and quality assurance steps.

Start with content-adaptive encoding if you're not already using it. SimaBit processes frames in under 16 milliseconds, allowing real-time preprocessing before your encoder. The AI analyzes each frame to identify areas where bitrate can be reduced without impacting perceived quality.
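Whether a 16 ms preprocessing step fits a live pipeline depends on the frame rate. The sketch below compares a per-frame processing time against the time available per frame. It assumes frames are processed one after another and ignores encoder time, so treat it as a first sanity check rather than a capacity plan; the function names are illustrative.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at the given frame rate."""
    return 1000.0 / fps


def fits_realtime(processing_ms: float, fps: float) -> bool:
    """True if per-frame processing finishes within one frame interval."""
    return processing_ms <= frame_budget_ms(fps)


for fps in (30, 60, 120):
    budget = frame_budget_ms(fps)
    print(f"{fps} fps: budget {budget:.2f} ms, 16 ms fits: {fits_realtime(16.0, fps)}")
```

Under these assumptions, 16 ms per frame fits 30 and 60 fps streams but not 120 fps without batching or parallel processing.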

For encoding configuration, use longer GOPs of 5-10 seconds, chosen to fit your segment duration. For VOD content, implement 2-pass VBR with a 200% constraint, meaning the peak bitrate is capped at twice the target average. Reserve CBR for live streaming or tightly managed bandwidth scenarios where predictability matters more than efficiency.
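As one way to express these settings, the sketch below builds the two ffmpeg invocations for a 2-pass, 200%-constrained VBR encode with libx264. The helper name, the 6-second GOP default, the buffer size, and the file names are assumptions for illustration; the function only returns argument lists and does not run ffmpeg.

```python
import os


def two_pass_vbr_args(src, dst, target_kbps, fps, gop_seconds=6, cap_ratio=2.0):
    """Return (first_pass, second_pass) ffmpeg argument lists.

    cap_ratio=2.0 caps the peak bitrate at 200% of the target average.
    gop_seconds should divide your segment duration evenly (assumed here).
    """
    gop_frames = int(round(fps * gop_seconds))
    maxrate_kbps = int(target_kbps * cap_ratio)
    common = [
        "ffmpeg", "-y", "-i", src,
        "-c:v", "libx264",
        "-b:v", f"{target_kbps}k",
        "-maxrate", f"{maxrate_kbps}k",
        "-bufsize", f"{maxrate_kbps}k",   # buffer size is an assumed choice
        "-g", str(gop_frames),            # keyframe interval in frames
        "-keyint_min", str(gop_frames),   # keep GOP length fixed
        "-sc_threshold", "0",             # no extra keyframes on scene cuts
    ]
    first = common + ["-pass", "1", "-an", "-f", "null", os.devnull]
    second = common + ["-pass", "2", "-c:a", "aac", dst]
    return first, second


first, second = two_pass_vbr_args("input.mp4", "output.mp4", 5000, 30)
print(" ".join(first))
print(" ".join(second))
```

The argument lists can be passed to `subprocess.run` once the values have been checked against your own ladder.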

Quality verification requires careful attention to metrics. Target VMAF scores of 93-95 for premium content and 85-92 for general streaming. When scaling to the lower-bitrate rungs of the ladder, the Lanczos filter delivers better quality at no additional cost, making it essential for maximizing efficiency.
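These targets are easy to encode as an automated gate. The sketch below maps a measured VMAF score onto the tiers named above and builds an ffmpeg `scale` filter string that selects Lanczos resampling. The tier labels and helper names are assumptions for illustration; the thresholds come from the targets in the text.

```python
def vmaf_tier(score: float) -> str:
    """Classify a VMAF score against the targets in the text."""
    if score >= 93:
        return "premium"        # premium target: 93-95
    if score >= 85:
        return "general"        # general streaming target: 85-92
    return "below target"


def lanczos_scale_filter(width: int, height: int) -> str:
    """ffmpeg video filter string that scales with Lanczos resampling."""
    return f"scale={width}:{height}:flags=lanczos"


print(vmaf_tier(94.1))
print(vmaf_tier(88.0))
print(lanczos_scale_filter(1280, 720))
```

The filter string is meant to be passed to ffmpeg's `-vf` option when producing a lower rung.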

For AV1 implementation, SVT-AV1 offers 2-5× faster encoding than libaom-AV1 with solid compression efficiency. Slower presets at the same CRF yield 5-15% better quality (VMAF) and smaller files, though encoding time increases proportionally.
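The preset trade-off can be captured in a small helper that builds an ffmpeg command for the libsvtav1 encoder. The CRF and preset values below are illustrative assumptions, not recommendations from the text. In SVT-AV1, lower preset numbers are slower and more efficient, and the valid range depends on your build.

```python
def svt_av1_args(src, dst, crf=32, preset=8):
    """Return an ffmpeg argument list for a CRF encode with libsvtav1.

    crf and preset defaults are assumed values for illustration.
    """
    return [
        "ffmpeg", "-y", "-i", src,
        "-c:v", "libsvtav1",
        "-crf", str(crf),
        "-preset", str(preset),   # lower number = slower, more efficient
        "-c:a", "copy",
        dst,
    ]


# Same CRF, different presets: a fast pass for review, a slow one for delivery.
review = svt_av1_args("master.mov", "review.mkv", preset=10)
final = svt_av1_args("master.mov", "final.mkv", preset=4)
print(" ".join(review))
print(" ".join(final))
```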

How Do You Prove Success with VMAF, PSNR & Cost Savings?

Measuring AI upscaling effectiveness requires both objective quality metrics and real-world cost analysis.

SimaBit's benchmark results demonstrate the potential: 22% average bitrate reduction paired with a 4.2-point VMAF quality increase. This translates to a 37% decrease in buffering events, directly improving viewer experience while reducing infrastructure load.

On full-reference metrics, AI models achieve a 40% improvement in peak signal-to-noise ratio (PSNR) and a 35% improvement in Structural Similarity Index (SSIM) across various test videos. User studies show a 75% increase in viewer satisfaction scores for AI-enhanced videos compared to their original versions.
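For reference, PSNR is computed from the mean squared error between a reference frame and a processed frame. The sketch below uses plain Python lists of 8-bit sample values so it runs without dependencies; production pipelines would normally rely on a tool such as ffmpeg or an array library. Because PSNR is a logarithmic measure in decibels, percentage changes in it should be read with care.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length sample lists."""
    if len(reference) != len(distorted):
        raise ValueError("inputs must have the same number of samples")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)


reference = [100, 100, 100, 100]
distorted = [101, 99, 100, 100]
print(f"{psnr(reference, distorted):.2f} dB")
```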

The cost impact extends beyond bandwidth. AI-powered workflows cut operational costs by up to 25% through reduced CDN fees, fewer re-transcodes, and lower energy consumption. Dynamic AI engines anticipate bandwidth shifts and trim buffering by up to 50% while sustaining resolution, ensuring viewers get consistent quality regardless of network conditions.
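A back-of-the-envelope model shows how a bitrate reduction flows into CDN spend. The stream bitrate, viewing hours, and per-GB price below are hypothetical inputs chosen for illustration; only the 22% reduction comes from the benchmark figure above. The model assumes egress cost scales linearly with delivered bytes.

```python
def delivered_gb(bitrate_mbps: float, viewer_hours: float) -> float:
    """Data delivered in decimal gigabytes for a given bitrate and watch time."""
    megabits = bitrate_mbps * 3600 * viewer_hours
    return megabits / 8 / 1000


def cdn_cost(bitrate_mbps: float, viewer_hours: float, price_per_gb: float) -> float:
    """Egress cost, assuming a flat per-GB price."""
    return delivered_gb(bitrate_mbps, viewer_hours) * price_per_gb


def cdn_savings(bitrate_mbps, viewer_hours, price_per_gb, reduction=0.22):
    """Cost difference after applying a fractional bitrate reduction."""
    before = cdn_cost(bitrate_mbps, viewer_hours, price_per_gb)
    after = cdn_cost(bitrate_mbps * (1 - reduction), viewer_hours, price_per_gb)
    return before - after


# Hypothetical inputs: 5 Mbps stream, 1,000,000 viewer-hours, $0.02 per GB.
print(f"baseline: ${cdn_cost(5, 1_000_000, 0.02):,.0f}")
print(f"saved:    ${cdn_savings(5, 1_000_000, 0.02):,.0f}")
```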

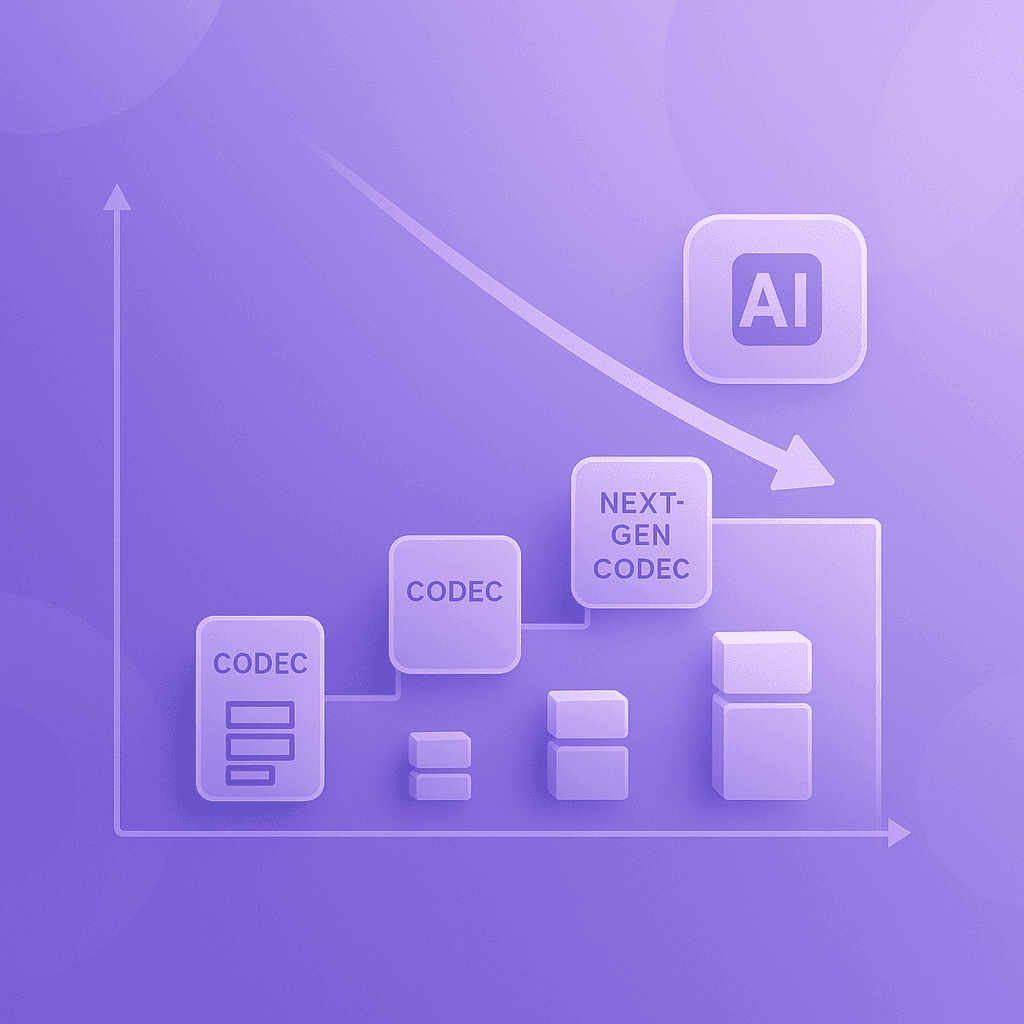

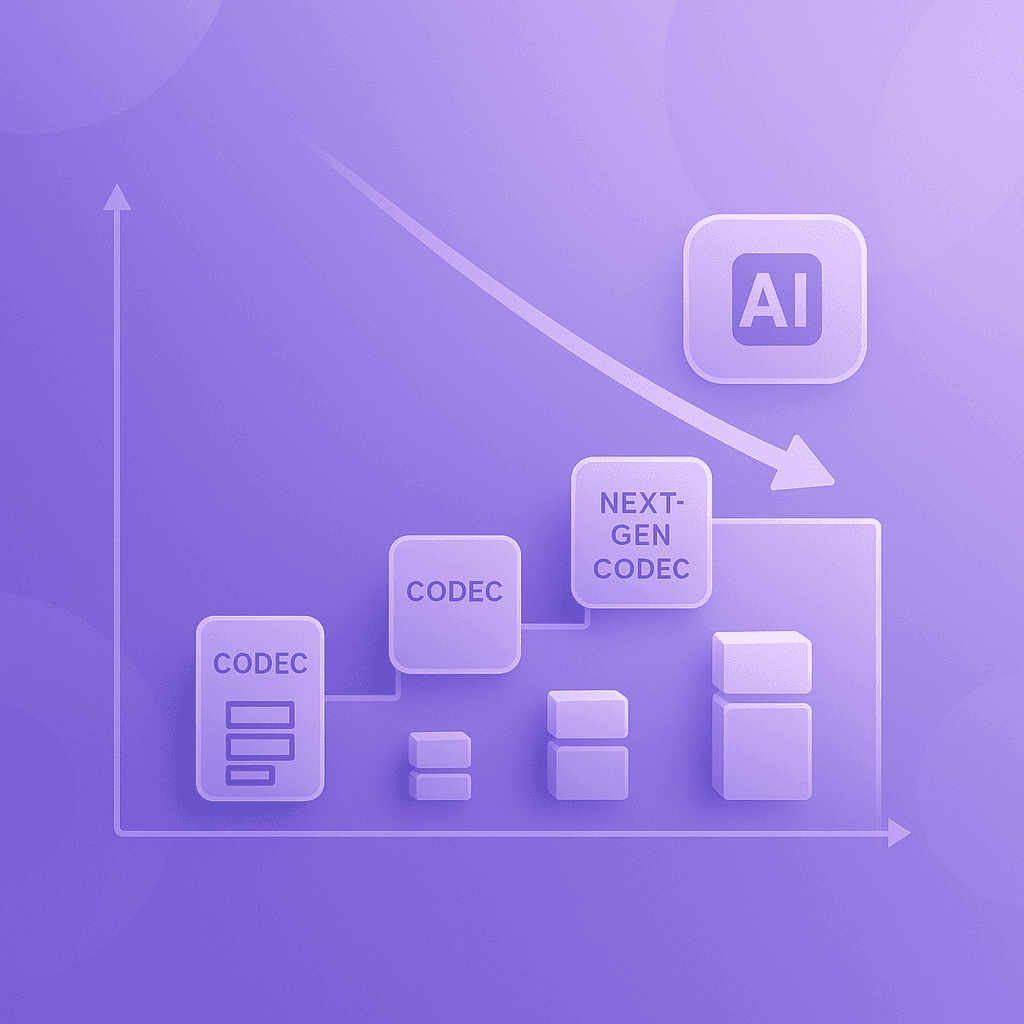

Will AV2 & Learned Compression Rewrite the Rules Again?

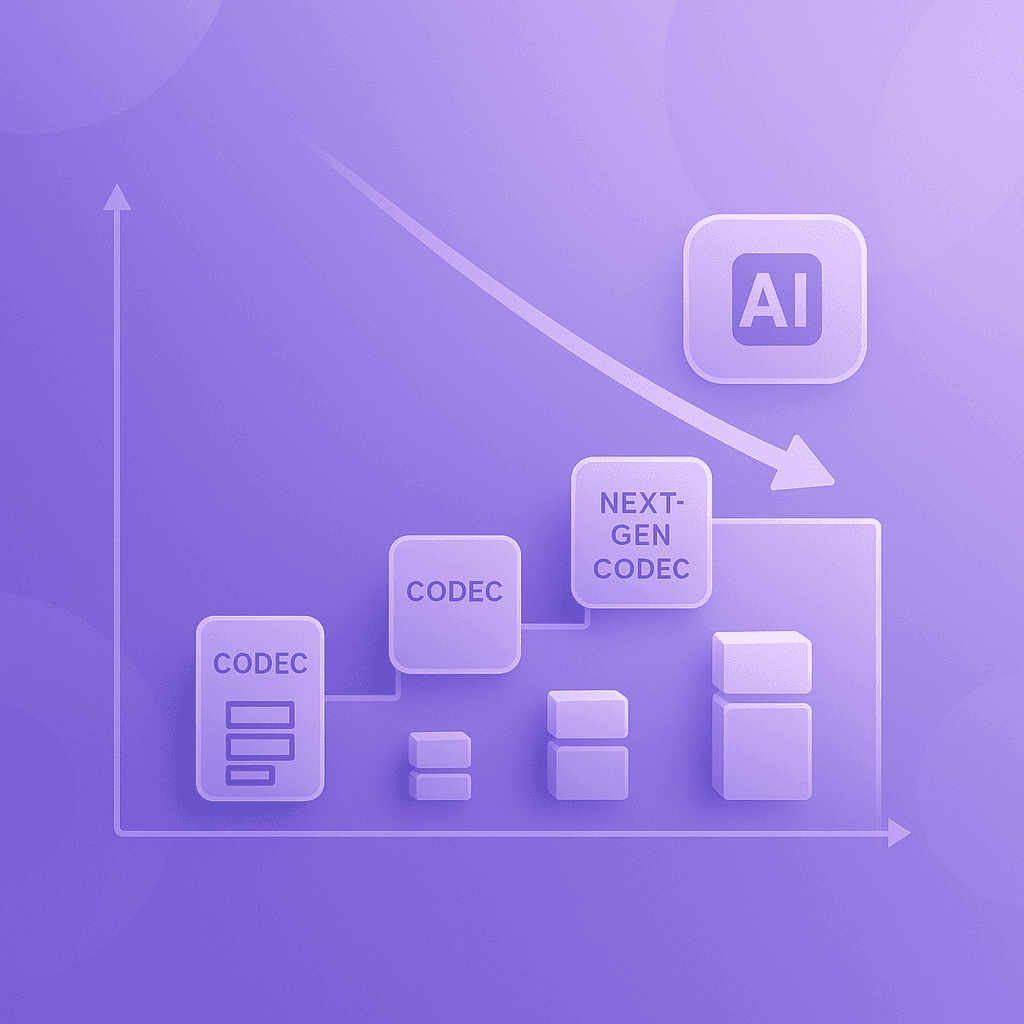

The codec landscape continues evolving, with AV2 poised to deliver another generational leap in compression efficiency.

Early benchmarks show AV2 achieving 28.6% bitrate reduction for equivalent PSNR-YUV and 32.6% reduction based on VMAF compared to AV1. Some configurations demonstrate up to 35.71% improvement in VMAF scores, promising the same picture quality using 30% less bandwidth.

Adoption appears imminent, with 53% of Alliance for Open Media (AOMedia) members planning to adopt AV2 within 12 months of final release, and 88% expecting implementation within two years. The codec introduces enhanced support for AR/VR applications, split-screen delivery, and improved screen content handling.

AI preprocessing remains valuable even as new codecs emerge. SimaBit's codec-agnostic approach ensures compatibility with H.264, HEVC, AV1, and emerging AV2 standards without workflow disruption. When combined with AV2, early tests indicate total bitrate reduction reaching 30% while maintaining broadcast quality.

What Pitfalls Should You Avoid Before You Push Play?

Successful AI upscaling deployment requires awareness of common implementation challenges.

Traditional techniques struggle with critical challenges like motion artifacts and temporal inconsistencies, especially in real-time environments. Optical flow estimation with modern AI models results in a 60% reduction in motion artifacts compared to traditional methods, but only when properly configured.

Existing deep learning-based upscaling methods suffer from high computational cost and limited real-time processing capabilities. The key is selecting models that dynamically adapt to available hardware resources rather than requiring fixed GPU configurations.

Processing time remains a consideration for non-real-time workflows. Typical social media clips of 15-30 seconds at 1080p take 10-45 minutes depending on hardware and quality settings. Plan your pipeline accordingly, potentially using faster presets for initial reviews and slower settings for final delivery.

Key Takeaways on AI Video Upscaling & Bandwidth Efficiency

AI video upscaling has evolved from experimental technology to production-ready infrastructure that delivers measurable benefits across the entire video delivery chain.

The combination of better video quality and lower bandwidth requirements isn't theoretical: it's happening now. Teams are achieving 20%+ bandwidth savings while improving VMAF scores, reducing buffering events, and cutting operational costs by up to 25%.

SimaUpscale delivers instant resolution boosts from 2× to 4× with seamless quality preservation, while SimaBit provides codec-agnostic preprocessing that works with your existing pipeline. Whether you're streaming live events, delivering VOD content, or preparing for next-generation codecs, AI upscaling offers a path to better quality at lower cost.

The technology continues advancing rapidly, but the core value proposition remains clear: deliver exceptional viewing experiences while dramatically reducing infrastructure costs. For teams looking to stay competitive in the streaming landscape, AI video upscaling isn't just an option: it's becoming essential infrastructure.

Frequently Asked Questions

What is AI video upscaling?

AI video upscaling uses neural models to preprocess video frames, allowing lower-bitrate files to reconstruct as high-quality HD or 4K during playback, enhancing viewer experience while saving bandwidth.

How does AI video upscaling save bandwidth?

AI video upscaling saves bandwidth by preprocessing video frames to predict perceptual redundancies, allowing for lower bitrate files that maintain high visual quality, reducing CDN costs and network load.

What are the benefits of using SimaUpscale and SimaBit?

SimaUpscale provides instant resolution boosts from 2× to 4× with seamless quality preservation, while SimaBit offers codec-agnostic preprocessing, achieving significant bitrate reduction and quality improvement, making them ideal for various streaming applications.

How does AI preprocessing work with new codecs like AV2?

AI preprocessing, such as SimaBit, remains valuable with new codecs like AV2 by ensuring compatibility and enhancing compression efficiency, achieving up to 30% total bitrate reduction while maintaining broadcast quality.

What challenges should be considered when implementing AI upscaling?

Challenges include managing motion artifacts and computational costs. Proper configuration and selecting adaptable models are crucial to overcoming these issues and ensuring efficient real-time processing.

Sources

https://www.simalabs.ai/resources/best-practices-codec-integration-h264-hevc-av1-2025

https://www.simalabs.ai/resources/best-real-time-genai-video-enhancement-engines-october-2025

https://www.simalabs.ai/resources/how-generative-ai-video-models-enhance-streaming-q-c9ec72f0

https://link.springer.com/article/10.1007/s10586-025-05538-z

https://www.simalabs.ai/resources/ai-enhanced-ugc-streaming-2030-av2-edge-gpu-simabit

https://jisem-journal.com/index.php/journal/article/view/6540

How to upscale videos using AI with 20% bandwidth savings

AI video upscaling combines neural preprocessing with traditional encoders to achieve 20% bandwidth savings while improving quality. Modern solutions like SimaBit reduce bitrates by 22% while increasing VMAF scores by 4.2 points, processing 1080p frames in under 16 milliseconds for real-time applications.

At a Glance

• AI preprocessing acts as an intelligent filter before encoding, predicting perceptual redundancies to achieve 22% bandwidth reduction without quality loss

• Super-resolution technology enables encoding at 720p with AI reconstruction to 1080p or higher at playback, cutting file sizes dramatically

• Frame interpolation reduces bandwidth needs by predicting intermediate frames instead of encoding native high frame rates

• SimaBit processes frames in 16ms, enabling real-time preprocessing for both live streaming and VOD workflows

• Implementation delivers 25% operational cost reduction through lower CDN fees and reduced re-transcoding needs

• Solutions work seamlessly with existing H.264, HEVC, and AV1 pipelines without hardware upgrades

AI video upscaling is reshaping streaming economics. By preprocessing frames with neural models, teams can ship lower-bitrate files that reconstruct as razor-sharp HD or 4K on playback, often cutting bandwidth about 20% while enhancing viewer quality.

Why AI Video Upscaling and Bandwidth Savings Now Matter

The urgency for efficient video delivery has never been greater. Video traffic comprises 82% of all IP traffic, while the global media streaming market races toward $285.4 billion by 2034.

This explosive growth creates a critical challenge: how to deliver higher quality video without overwhelming network infrastructure or ballooning CDN costs. The answer lies in AI-powered preprocessing that fundamentally changes the bandwidth equation. Modern solutions achieve 22% or more bandwidth reduction while simultaneously improving visual quality: a combination previously thought impossible.

The technology works by acting as an intelligent pre-filter for encoders. Rather than simply compressing video, AI models predict perceptual redundancies and reconstruct fine detail after compression. This approach delivers immediate cost benefits beyond just bandwidth savings. Smaller files mean leaner CDN bills, fewer re-transcodes, and lower energy consumption across the entire delivery chain.

How Do Super-Resolution, Frame Interpolation & Neural Codecs Work?

Three core AI techniques power modern video enhancement: super-resolution, frame interpolation, and neural preprocessing. Each addresses different aspects of the quality-bandwidth challenge.

Super-resolution uses spatial-temporal cues to upsample from lower resolutions. Instead of encoding at native 4K, you can encode at 720p and let neural networks reconstruct the detail at playback. The AI analyzes motion patterns and texture information across multiple frames to estimate crisp detail beyond the original pixel count.

Frame interpolation predicts intermediate frames between existing ones using machine learning models trained on millions of video sequences. This technique sidesteps the bandwidth demands of native high frame rates. A 60fps video requires roughly double the data of 30fps, while 120fps can quadruple requirements.

Neural codecs represent the cutting edge, with models achieving balance between inference speed and visual fidelity. These systems dynamically adapt to available hardware resources, processing frames in real-time while maintaining broadcast-quality output. The trained models convert to ONNX format for broad compatibility while maintaining low latency essential for streaming applications.

Picking the Right Toolchain: SimaUpscale, SimaBit & Topaz Video AI

Selecting the optimal AI engine depends on your specific workflow requirements, budget constraints, and quality targets.

SimaUpscale boosts resolution instantly from 2× to 4× with seamless quality preservation, making it ideal for real-time applications requiring ultra-high fidelity. The technology integrates seamlessly with existing pipelines while delivering crystal-clear streaming for high-impact scenarios like live sports and concerts.

SimaBit takes a different approach as a codec-agnostic preprocessing engine. The technology achieves 22% average reduction in bitrate while delivering a 4.2-point VMAF quality increase: a rare combination of bandwidth savings and quality improvement. It processes 1080p frames in under 16 milliseconds, making it suitable for both live streaming and VOD workflows.

Topaz Video denoise low-light footage and upscales old archival videos to 4K, starting at $25 per month. The software uses AI to achieve sharper results than standard tools allow, with one user noting: "Amazing software for enhancing and upscaling video and footage in bad condition. A real gem even with zero technical knowledge."

What Is the Step-by-Step Workflow to Deliver 20 % Leaner Streams?

Implementing AI upscaling requires careful integration of preprocessing, encoding, and quality assurance steps.

Start with content-adaptive encoding if you're not already using it. SimaBit processes frames in under 16 milliseconds, allowing real-time preprocessing before your encoder. The AI analyzes each frame to identify areas where bitrate can be reduced without impacting perceived quality.

For encoding configuration, use longer GOPs of 5-10 seconds depending on segment duration. For VOD content, implement 2-pass VBR with a 200% constraint. Reserve CBR only for live streaming or tightly managed bandwidth scenarios where predictability matters more than efficiency.

Quality verification requires careful attention to metrics. Target VMAF scores of 93-95 for premium content and 85-92 for general streaming. The Lanczos filter delivers better quality in lower bitrate rungs at no additional cost, making it essential for maximizing efficiency.

For AV1 implementation, SVT-AV1 offers 2-5× faster encoding than libaom-AV1 with solid compression efficiency. Slower presets at the same CRF yield 5-15% better quality (VMAF) and smaller files, though encoding time increases proportionally.

How Do You Prove Success with VMAF, PSNR & Cost Savings?

Measuring AI upscaling effectiveness requires both objective quality metrics and real-world cost analysis.

SimaBit's benchmark results demonstrate the potential: 22% average bitrate reduction paired with a 4.2-point VMAF quality increase. This translates to a 37% decrease in buffering events: directly improving viewer experience while reducing infrastructure load.

For PSNR measurements, AI models achieve 40% improvement in peak signal-to-noise ratio and 35% enhancement in Structural Similarity Index (SSIM) across various test videos. User studies show a 75% increase in viewer satisfaction scores for AI-enhanced videos compared to their original versions.

The cost impact extends beyond bandwidth. AI-powered workflows cut operational costs by up to 25% through reduced CDN fees, fewer re-transcodes, and lower energy consumption. Dynamic AI engines anticipate bandwidth shifts and trim buffering by up to 50% while sustaining resolution, ensuring viewers get consistent quality regardless of network conditions.

Will AV2 & Learned Compression Rewrite the Rules Again?

The codec landscape continues evolving, with AV2 poised to deliver another generational leap in compression efficiency.

Early benchmarks show AV2 achieving 28.6% bitrate reduction for equivalent PSNR-YUV and 32.6% reduction based on VMAF compared to AV1. Some configurations demonstrate up to 35.71% improvement in VMAF scores, promising the same picture quality using 30% less bandwidth.

Adoption appears imminent, with 53% of Alliance members planning to adopt AV2 within 12 months of final release, and 88% expecting implementation within two years. The codec introduces enhanced support for AR/VR applications, split-screen delivery, and improved screen content handling.

AI preprocessing remains valuable even as new codecs emerge. SimaBit's codec-agnostic approach ensures compatibility with H.264, HEVC, AV1, and emerging AV2 standards without workflow disruption. When combined with AV2, early tests indicate total bitrate reduction reaching 30% while maintaining broadcast quality.

What Pitfalls Should You Avoid Before You Push Play?

Successful AI upscaling deployment requires awareness of common implementation challenges.

Traditional techniques struggle with critical challenges like motion artifacts and temporal inconsistencies, especially in real-time environments. Optical flow estimation with modern AI models results in a 60% reduction in motion artifacts compared to traditional methods, but only when properly configured.

Existing deep learning-based upscaling methods suffer from high computational cost and limited real-time processing capabilities. The key is selecting models that dynamically adapt to available hardware resources rather than requiring fixed GPU configurations.

Processing time remains a consideration for non-real-time workflows. Typical social media clips of 15-30 seconds at 1080p take 10-45 minutes depending on hardware and quality settings. Plan your pipeline accordingly, potentially using faster presets for initial reviews and slower settings for final delivery.

Key Takeaways on AI Video Upscaling & Bandwidth Efficiency

AI video upscaling has evolved from experimental technology to production-ready infrastructure that delivers measurable benefits across the entire video delivery chain.

The combination of better video quality and lower bandwidth requirements isn't theoretical: it's happening now. Teams are achieving 20%+ bandwidth savings while improving VMAF scores, reducing buffering events, and cutting operational costs by up to 25%.

SimaUpscale delivers instant resolution boosts from 2× to 4× with seamless quality preservation, while SimaBit provides codec-agnostic preprocessing that works with your existing pipeline. Whether you're streaming live events, delivering VOD content, or preparing for next-generation codecs, AI upscaling offers a path to better quality at lower cost.

The technology continues advancing rapidly, but the core value proposition remains clear: deliver exceptional viewing experiences while dramatically reducing infrastructure costs. For teams looking to stay competitive in the streaming landscape, AI video upscaling isn't just an option: it's becoming essential infrastructure.

Frequently Asked Questions

What is AI video upscaling?

AI video upscaling uses neural models to preprocess video frames, allowing lower-bitrate files to reconstruct as high-quality HD or 4K during playback, enhancing viewer experience while saving bandwidth.

How does AI video upscaling save bandwidth?

AI video upscaling saves bandwidth by preprocessing video frames to predict perceptual redundancies, allowing for lower bitrate files that maintain high visual quality, reducing CDN costs and network load.

What are the benefits of using SimaUpscale and SimaBit?

SimaUpscale provides instant resolution boosts from 2× to 4× with seamless quality preservation, while SimaBit offers codec-agnostic preprocessing, achieving significant bitrate reduction and quality improvement, making them ideal for various streaming applications.

How does AI preprocessing work with new codecs like AV2?

AI preprocessing, such as SimaBit, remains valuable with new codecs like AV2 by ensuring compatibility and enhancing compression efficiency, achieving up to 30% total bitrate reduction while maintaining broadcast quality.

What challenges should be considered when implementing AI upscaling?

Challenges include managing motion artifacts and computational costs. Proper configuration and selecting adaptable models are crucial to overcoming these issues and ensuring efficient real-time processing.

Sources

https://www.simalabs.ai/resources/best-practices-codec-integration-h264-hevc-av1-2025

https://www.simalabs.ai/resources/best-real-time-genai-video-enhancement-engines-october-2025

https://www.simalabs.ai/resources/how-generative-ai-video-models-enhance-streaming-q-c9ec72f0

https://link.springer.com/article/10.1007/s10586-025-05538-z

https://www.simalabs.ai/resources/ai-enhanced-ugc-streaming-2030-av2-edge-gpu-simabit

https://jisem-journal.com/index.php/journal/article/view/6540

How to upscale videos using AI with 20% bandwidth savings

AI video upscaling combines neural preprocessing with traditional encoders to achieve 20% bandwidth savings while improving quality. Modern solutions like SimaBit reduce bitrates by 22% while increasing VMAF scores by 4.2 points, processing 1080p frames in under 16 milliseconds for real-time applications.

At a Glance

• AI preprocessing acts as an intelligent filter before encoding, predicting perceptual redundancies to achieve 22% bandwidth reduction without quality loss

• Super-resolution technology enables encoding at 720p with AI reconstruction to 1080p or higher at playback, cutting file sizes dramatically

• Frame interpolation reduces bandwidth needs by predicting intermediate frames instead of encoding native high frame rates

• SimaBit processes frames in 16ms, enabling real-time preprocessing for both live streaming and VOD workflows

• Implementation delivers 25% operational cost reduction through lower CDN fees and reduced re-transcoding needs

• Solutions work seamlessly with existing H.264, HEVC, and AV1 pipelines without hardware upgrades

AI video upscaling is reshaping streaming economics. By preprocessing frames with neural models, teams can ship lower-bitrate files that reconstruct as razor-sharp HD or 4K on playback, often cutting bandwidth about 20% while enhancing viewer quality.

Why AI Video Upscaling and Bandwidth Savings Now Matter

The urgency for efficient video delivery has never been greater. Video traffic comprises 82% of all IP traffic, while the global media streaming market races toward $285.4 billion by 2034.

This explosive growth creates a critical challenge: how to deliver higher quality video without overwhelming network infrastructure or ballooning CDN costs. The answer lies in AI-powered preprocessing that fundamentally changes the bandwidth equation. Modern solutions achieve 22% or more bandwidth reduction while simultaneously improving visual quality: a combination previously thought impossible.

The technology works by acting as an intelligent pre-filter for encoders. Rather than simply compressing video, AI models predict perceptual redundancies and reconstruct fine detail after compression. This approach delivers immediate cost benefits beyond just bandwidth savings. Smaller files mean leaner CDN bills, fewer re-transcodes, and lower energy consumption across the entire delivery chain.

How Do Super-Resolution, Frame Interpolation & Neural Codecs Work?

Three core AI techniques power modern video enhancement: super-resolution, frame interpolation, and neural preprocessing. Each addresses different aspects of the quality-bandwidth challenge.

Super-resolution uses spatial-temporal cues to upsample from lower resolutions. Instead of encoding at native 4K, you can encode at 720p and let neural networks reconstruct the detail at playback. The AI analyzes motion patterns and texture information across multiple frames to estimate crisp detail beyond the original pixel count.

Frame interpolation predicts intermediate frames between existing ones using machine learning models trained on millions of video sequences. This technique sidesteps the bandwidth demands of native high frame rates. A 60fps video requires roughly double the data of 30fps, while 120fps can quadruple requirements.

Neural codecs represent the cutting edge, with models achieving balance between inference speed and visual fidelity. These systems dynamically adapt to available hardware resources, processing frames in real-time while maintaining broadcast-quality output. The trained models convert to ONNX format for broad compatibility while maintaining low latency essential for streaming applications.

Picking the Right Toolchain: SimaUpscale, SimaBit & Topaz Video AI

Selecting the optimal AI engine depends on your specific workflow requirements, budget constraints, and quality targets.

SimaUpscale boosts resolution instantly from 2× to 4× with seamless quality preservation, making it ideal for real-time applications requiring ultra-high fidelity. The technology integrates seamlessly with existing pipelines while delivering crystal-clear streaming for high-impact scenarios like live sports and concerts.

SimaBit takes a different approach as a codec-agnostic preprocessing engine. The technology achieves 22% average reduction in bitrate while delivering a 4.2-point VMAF quality increase: a rare combination of bandwidth savings and quality improvement. It processes 1080p frames in under 16 milliseconds, making it suitable for both live streaming and VOD workflows.

Topaz Video denoise low-light footage and upscales old archival videos to 4K, starting at $25 per month. The software uses AI to achieve sharper results than standard tools allow, with one user noting: "Amazing software for enhancing and upscaling video and footage in bad condition. A real gem even with zero technical knowledge."

What Is the Step-by-Step Workflow to Deliver 20 % Leaner Streams?

Implementing AI upscaling requires careful integration of preprocessing, encoding, and quality assurance steps.

Start with content-adaptive encoding if you're not already using it. SimaBit processes frames in under 16 milliseconds, allowing real-time preprocessing before your encoder. The AI analyzes each frame to identify areas where bitrate can be reduced without impacting perceived quality.

For encoding configuration, use longer GOPs of 5-10 seconds depending on segment duration. For VOD content, implement 2-pass VBR with a 200% constraint. Reserve CBR only for live streaming or tightly managed bandwidth scenarios where predictability matters more than efficiency.

Quality verification requires careful attention to metrics. Target VMAF scores of 93-95 for premium content and 85-92 for general streaming. The Lanczos filter delivers better quality in lower bitrate rungs at no additional cost, making it essential for maximizing efficiency.

For AV1 implementation, SVT-AV1 offers 2-5× faster encoding than libaom-AV1 with solid compression efficiency. Slower presets at the same CRF yield 5-15% better quality (VMAF) and smaller files, though encoding time increases proportionally.

How Do You Prove Success with VMAF, PSNR & Cost Savings?

Measuring AI upscaling effectiveness requires both objective quality metrics and real-world cost analysis.

SimaBit's benchmark results demonstrate the potential: 22% average bitrate reduction paired with a 4.2-point VMAF quality increase. This translates to a 37% decrease in buffering events: directly improving viewer experience while reducing infrastructure load.

For PSNR measurements, AI models achieve 40% improvement in peak signal-to-noise ratio and 35% enhancement in Structural Similarity Index (SSIM) across various test videos. User studies show a 75% increase in viewer satisfaction scores for AI-enhanced videos compared to their original versions.

The cost impact extends beyond bandwidth. AI-powered workflows cut operational costs by up to 25% through reduced CDN fees, fewer re-transcodes, and lower energy consumption. Dynamic AI engines anticipate bandwidth shifts and trim buffering by up to 50% while sustaining resolution, ensuring viewers get consistent quality regardless of network conditions.

Will AV2 & Learned Compression Rewrite the Rules Again?

The codec landscape continues to evolve, with AV2 poised to deliver another generational leap in compression efficiency.

Early benchmarks show AV2 achieving 28.6% bitrate reduction for equivalent PSNR-YUV and 32.6% reduction based on VMAF compared to AV1. Some configurations demonstrate up to 35.71% improvement in VMAF scores, promising the same picture quality using 30% less bandwidth.

Adoption appears imminent, with 53% of Alliance members planning to adopt AV2 within 12 months of final release, and 88% expecting implementation within two years. The codec introduces enhanced support for AR/VR applications, split-screen delivery, and improved screen content handling.

AI preprocessing remains valuable even as new codecs emerge. SimaBit's codec-agnostic approach ensures compatibility with H.264, HEVC, AV1, and emerging AV2 standards without workflow disruption. When combined with AV2, early tests indicate total bitrate reduction reaching 30% while maintaining broadcast quality.

What Pitfalls Should You Avoid Before You Push Play?

Successful AI upscaling deployment requires awareness of common implementation challenges.

Traditional techniques struggle with critical challenges like motion artifacts and temporal inconsistencies, especially in real-time environments. Optical flow estimation with modern AI models results in a 60% reduction in motion artifacts compared to traditional methods, but only when properly configured.

Existing deep learning-based upscaling methods suffer from high computational cost and limited real-time processing capabilities. The key is selecting models that dynamically adapt to available hardware resources rather than requiring fixed GPU configurations.

Processing time remains a consideration for non-real-time workflows. Typical social media clips of 15-30 seconds at 1080p take 10-45 minutes depending on hardware and quality settings. Plan your pipeline accordingly, potentially using faster presets for initial reviews and slower settings for final delivery.
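For pipeline planning, the figures above can be turned into a slowdown factor (processing time divided by clip duration) and applied to longer content. This is a rough sketch: the function names are hypothetical, and the pairing of clip lengths with processing times is an assumption, since the article gives only ranges.

```python
def slowdown_factor(clip_seconds: float, processing_minutes: float) -> float:
    """How many seconds of processing each second of video requires."""
    return processing_minutes * 60.0 / clip_seconds


def estimate_minutes(clip_seconds: float, factor: float) -> float:
    """Projected processing time in minutes for a clip at a given factor."""
    return clip_seconds * factor / 60.0


if __name__ == "__main__":
    # Assumed pairings: shortest clip with fastest time, longest with slowest.
    fast = slowdown_factor(15, 10)
    slow = slowdown_factor(30, 45)
    print(f"slowdown range: {fast:.0f}x to {slow:.0f}x")
    print(f"2-minute clip: {estimate_minutes(120, fast):.0f} to {estimate_minutes(120, slow):.0f} min")
```

Measuring the factor on your own hardware with a short test clip gives a far better estimate than these generic ranges.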

Key Takeaways on AI Video Upscaling & Bandwidth Efficiency

AI video upscaling has evolved from experimental technology to production-ready infrastructure that delivers measurable benefits across the entire video delivery chain.

The combination of better video quality and lower bandwidth requirements isn't theoretical: it's happening now. Teams are achieving 20%+ bandwidth savings while improving VMAF scores, reducing buffering events, and cutting operational costs by up to 25%.

SimaUpscale delivers instant resolution boosts from 2× to 4× with seamless quality preservation, while SimaBit provides codec-agnostic preprocessing that works with your existing pipeline. Whether you're streaming live events, delivering VOD content, or preparing for next-generation codecs, AI upscaling offers a path to better quality at lower cost.

The technology continues advancing rapidly, but the core value proposition remains clear: deliver exceptional viewing experiences while dramatically reducing infrastructure costs. For teams looking to stay competitive in the streaming landscape, AI video upscaling isn't just an option: it's becoming essential infrastructure.

Frequently Asked Questions

What is AI video upscaling?

AI video upscaling uses neural models to preprocess video frames, allowing lower-bitrate files to reconstruct as high-quality HD or 4K during playback, enhancing viewer experience while saving bandwidth.

How does AI video upscaling save bandwidth?

AI video upscaling saves bandwidth by preprocessing video frames to predict perceptual redundancies, allowing for lower bitrate files that maintain high visual quality, reducing CDN costs and network load.

What are the benefits of using SimaUpscale and SimaBit?

SimaUpscale provides instant resolution boosts from 2× to 4× with seamless quality preservation, while SimaBit offers codec-agnostic preprocessing, achieving significant bitrate reduction and quality improvement, making them ideal for various streaming applications.

How does AI preprocessing work with new codecs like AV2?

AI preprocessing, such as SimaBit, remains valuable with new codecs like AV2 by ensuring compatibility and enhancing compression efficiency, achieving up to 30% total bitrate reduction while maintaining broadcast quality.

What challenges should be considered when implementing AI upscaling?

Challenges include managing motion artifacts and computational costs. Proper configuration and selecting adaptable models are crucial to overcoming these issues and ensuring efficient real-time processing.

SimaLabs

©2025 Sima Labs. All rights reserved
